I used Claude Code for 10 days after a year of cut-and-paste

Why I finally took everyone’s advice and tried a modern coding tool...and what happened when I did

Disclosure: This article was written by me, a very busy human, and lightly edited by Claude Code. Grammarly made suggestions, but they were summarily ignored.

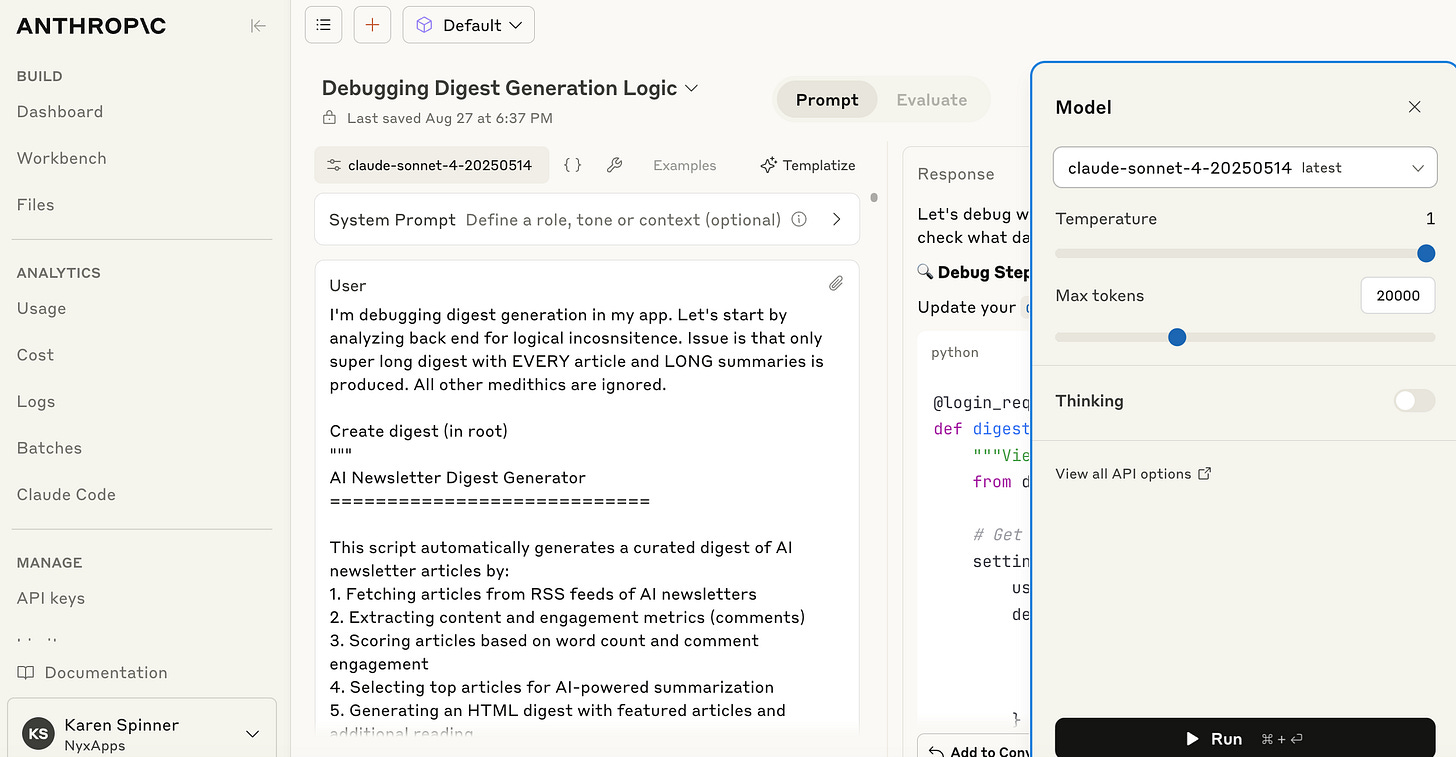

I've been building various tools and even one production-grade app with AI for a little over a year, and until recently, I thought I had my process down pat. I would log onto Anthropic's Console, choose a model and tweak the settings, attach a background doc, and prompt away. I was always careful to do one thing at a time and, when Claude gave me some code, I would paste it into the relevant project files using Sublime Text, check it carefully, and test the result.

While this process was cumbersome and often resulted in deeply annoying indentation errors, it forced me to actually inspect the code and attempt to understand what was going on. Sometimes I would even look up the syntax itself and research all the libraries I was importing. Over time, I developed a better understanding of Python—a language that assigns meaning to whitespace, which means a bad tab or space can break everything—as well as HTML and JavaScript.
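To illustrate the kind of indentation error that cut-and-paste can cause, here is a minimal sketch. The `check_paste` helper and the two sample snippets are hypothetical, made up for this example; the only real API used is Python's built-in `compile()`.

```python
# Hypothetical pasted snippets: the second one lost its indentation on the way in.
GOOD_PASTE = "def greet(name):\n    return 'hi ' + name\n"
BAD_PASTE = "def greet(name):\nreturn 'hi ' + name\n"


def check_paste(source):
    """Return None if the source compiles, else the name of the error raised."""
    try:
        # compile() parses the code without running it.
        compile(source, "<pasted>", "exec")
    except SyntaxError as exc:  # IndentationError is a subclass of SyntaxError
        return type(exc).__name__
    return None


print(check_paste(GOOD_PASTE))
print(check_paste(BAD_PASTE))
```

Running a check like this before saving a file catches whitespace problems early, though it says nothing about whether the logic is right.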

I also liked being able to pay for my Console use with API credits. Because the amount of time I had available to code was unpredictable, I’d often have a slow month where I’d spend $5 in credits, followed by a heavy month in which I’d drop $50 or more. I felt like the pay-as-you-go model was a good fit for me, and it meant I didn't have to keep track of yet another SaaS subscription.

While I'd heard people raving about Claude Code and Cursor, I was skeptical. I worried about giving models unfettered access to my entire codebase. One hallucination or mistake could easily be propagated everywhere. Sure, I could just use git to drop the problematic code and revert to an earlier state, but what if it was a subtle issue that only showed up in production?

And what about catastrophic data issues? I imagined Claude or GPT making unwanted changes to my data model, which could force me to drop and recreate the database and all my test data.

When I started building my newsletter digest app, I decided to stick with my tried-and-true approach. Sure, it was a little slower, but to the me of a few weeks ago, it seemed a whole lot less risky.

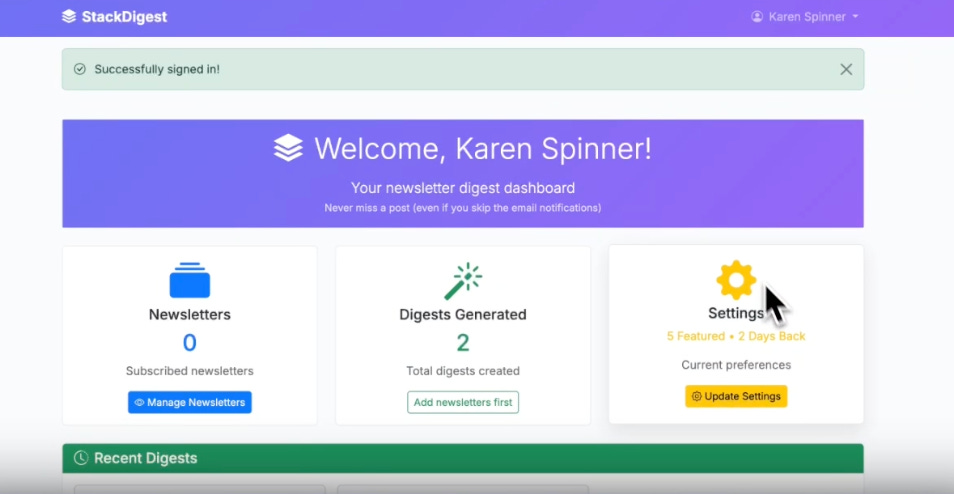

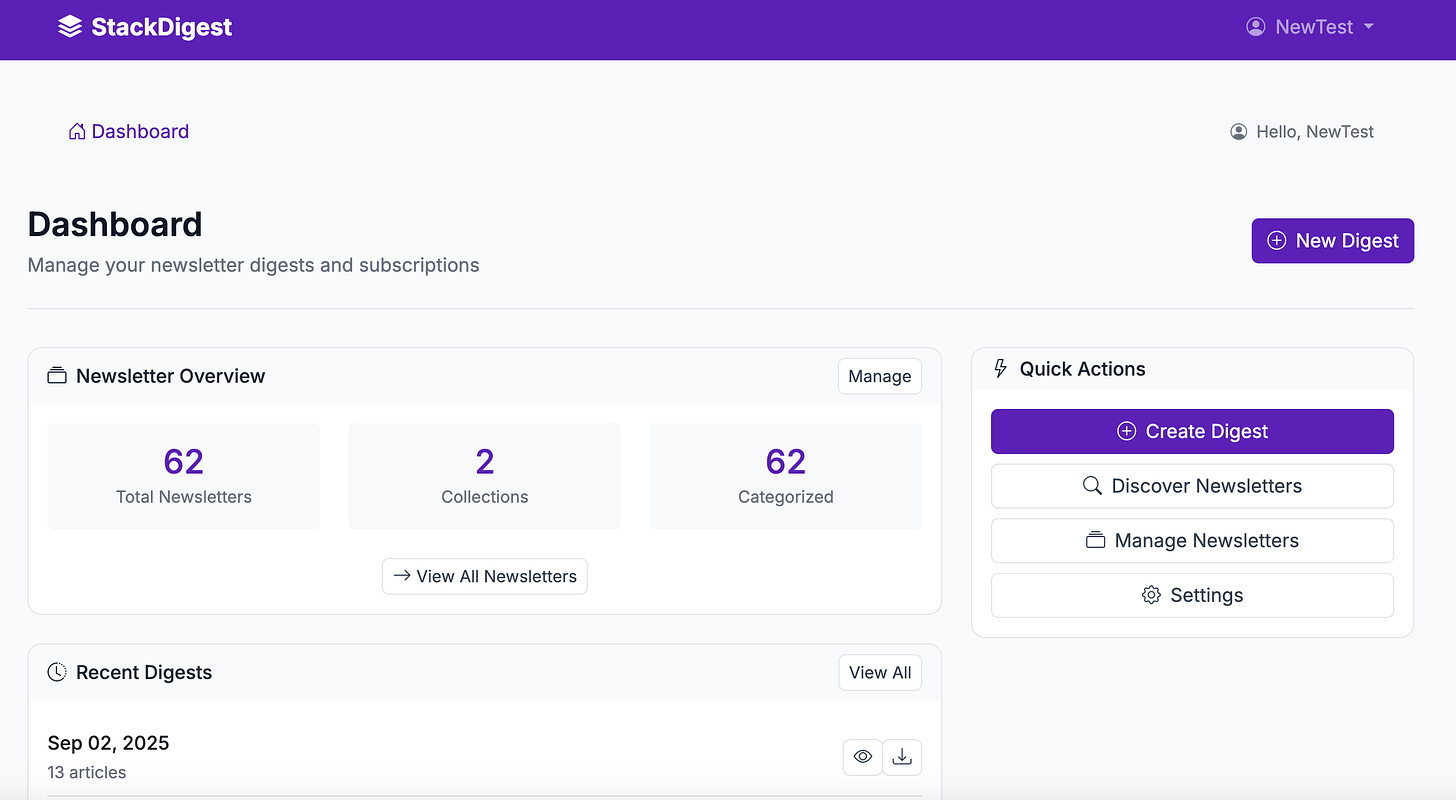

This article is brought to you by StackDigest, a newsletter digest and curation app that helps readers keep up with their subscriptions and publishers add value without writing more.

The project that broke my method

After I built a prototype version of the newsletter digest app and received positive feedback, I decided to keep going. I added new types of digests, user-defined collections, support for very large libraries of newsletters, and more. And with all these features came lots of code. Specifically, lots of Python, CSS, HTML, and JavaScript. I had five separate modules and another on the way.

Explaining the codebase and file structure to "Console Claude" every time I needed to fix a bug or add a feature was getting increasingly time-consuming. As I kept building features, I realized that the refactoring (i.e., rewriting code to be more compact and organized) and UI overhaul I had planned would require global changes that could take weeks using my cut-and-paste method combined with a bit of hand coding.

The cost was becoming another issue. As I spent more time updating my project, I noticed my API costs steadily ticking up. When I enlisted Opus 4.1 to help me with a particularly annoying multi-threaded processing bug, I quickly torched more than $50 in a shockingly short amount of time.

Between the budget and my development schedule, something had to give. I decided it was time to take a chance. The next morning, I signed up for a Claude Max account at the lower tier, which costs $100/month and can be cancelled at will, and installed Claude Code.
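The trade-off can be sketched as simple arithmetic. This is a rough comparison only: the `compare_costs` function is hypothetical, the $100 fee is the Max tier price mentioned above, and the sample monthly figures are illustrative rather than my actual bills. It also ignores subscription usage limits.

```python
def compare_costs(api_spend_by_month, subscription_fee=100.0):
    """Compare total pay-as-you-go spend against a flat monthly subscription."""
    api_total = float(sum(api_spend_by_month))
    subscription_total = subscription_fee * len(api_spend_by_month)
    cheaper = "api" if api_total < subscription_total else "subscription"
    return {
        "api_total": api_total,
        "subscription_total": subscription_total,
        "cheaper": cheaper,
    }


# A slow month ($5) followed by a heavy month ($50): pay-as-you-go wins.
print(compare_costs([5, 50]))

# Hypothetical months with several expensive debugging sessions each.
print(compare_costs([150, 120]))
```

In other words, the subscription only pays off once monthly API spend would regularly exceed the flat fee.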

Making the switch

Claude Code is a command-line tool that lets you work with Claude from your terminal. Instead of switching between your coding environment and a web browser to chat with Claude, you can ask Claude to make changes directly to your files. In other words, no more cut-and-paste.

In theory, to install Claude Code, you first need to install Node.js 18 or newer, which is a runtime environment that lets you execute JavaScript outside of a web browser. Claude Code is built using JavaScript and related technologies, so it needs Node.js installed on your computer to run.

But because I was in a hurry to get started, I skipped the Node install and used Anthropic's native install script (currently in beta), which doesn't require a separate Node.js installation.

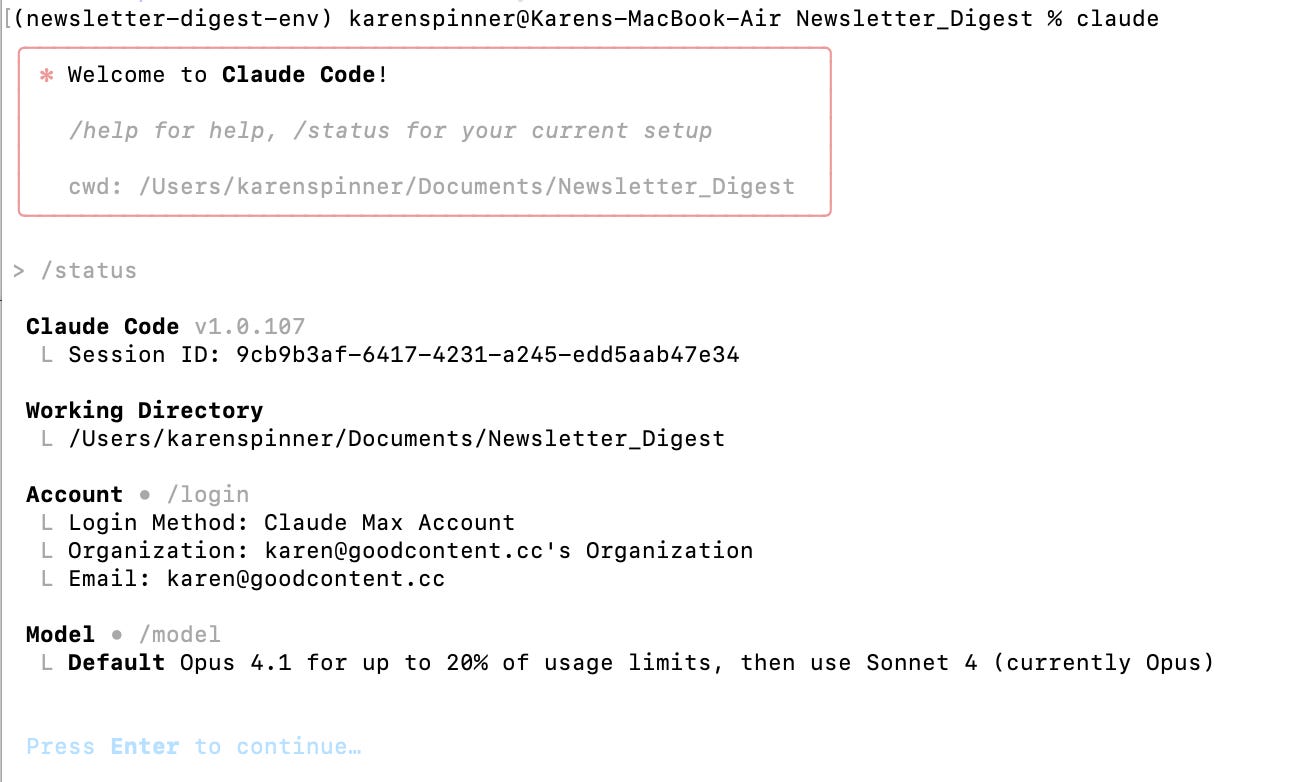

Once Claude Code was installed, I started the virtual environment for my project and navigated to the main project directory. I launched Claude Code and typed in the /login command. While you can use Claude Code with your API key or your Claude chat account, I picked the latter, since the former, as previously noted, will burn 🔥 through your cash.

A clean and simple UI

I was a little concerned about working with Claude through the command line, since I was used to the Console, but I found the CLI clean and surprisingly straightforward. It uses colors to highlight menu selections, and it's easy to read. The conversational flow feels natural; you type your request, Claude responds, and you can see exactly what files it's examining or modifying in real time.

Introducing Claude Code to my project

The interface recommended that I ask Claude to explore my project and then warm up with a few simple bug fixes. So that's exactly what I did. I started by asking Claude to review my codebase and then summarize its findings in a readme.md file located in my main project directory.

I watched, vaguely amazed, as Claude inspected files and made notes on screen. Because it's an agent (an AI that can use tools with some degree of autonomy), it could not only read files but also edit them. Within a few minutes, it had crawled through my code and written a comprehensive summary of my tech stack, file structure, and core functionality.

Here's a quick snippet from the context doc Claude created:

# Newsletter Digest - Codebase Analysis

## Project Overview

Newsletter Digest is a Django-based web application that aggregates and summarizes newsletter content for users. It fetches articles from RSS feeds, applies AI-powered summarization using Claude API, and generates customized digests based on user preferences.

## Technology Stack

**Backend Framework**: Django 4.2.7

**Database**: SQLite (development), PostgreSQL (production via psycopg2-binary)

**Task Queue**: Celery 5.3.4 with Redis 5.0.1

**AI Integration**: Anthropic Claude API (anthropic 0.61.0)

**Web Server**: Gunicorn 21.2.0

**Authentication**: django-allauth 0.57.0

**RSS Processing**: feedparser 6.0.10

**HTML Processing**: BeautifulSoup 4.12.2

**Deployment**: Heroku (Procfile, runtime.txt configured for Python 3.12)

## Core Architecture

### Main Applications

1. **accounts/** - User authentication and profile management

2. **digests/** - Core digest generation and configuration

3. **newsletters/** - Newsletter subscription and management

4. **core/** - Dashboard and central routing

5. **digest_webapp/** - Django project settings and configuration

Quick tip: At the end of every session, have Claude update your readme.md file with any significant changes or new features added.

Swatting a few bugs

Already pleased with the experience, I began feeding Claude my bug list, one at a time. I started with some broken links on the Digest History page, then had it fix a timestamp error, and finally repair a bookmarklet that wasn't scraping custom Substack domains when it should have. The experience was somewhat eerie: I watched Claude's invisible hand fixing one bug after the next.

I was also relieved that Claude would show its proposed changes onscreen and ask for permission to proceed. I approved changes one by one for a while and, after reading through many screens and gaining confidence, pressed Shift+Tab to turn on auto-accept mode, which let Claude apply its edits without asking each time.

This all went so well that I decided it was time for something a little more ambitious.

Quick tip: Always have Claude fix one bug at a time. Piling on multiple fixes means it's harder to tease out the issue if (or, let’s be honest, when) something fails.

From magic to random acts of ignorance

Very large newsletter imports, which at the time were handled by a web dyno (the server process that handles user requests in real time), were sometimes failing. Even worse, a failure would trigger Heroku's purple screen of death, a major interruption to the user experience. So I asked Claude to refactor the import functions to run on a worker dyno instead. A worker dyno is a background process that can handle long-running tasks without blocking the web interface, and a failed task there doesn't crash the UI.
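The idea of moving a long-running import off the request path can be sketched as follows. This is not my app's code and it does not use Celery or Redis: it is a standard-library stand-in (a `queue.Queue` plus a background thread) that shows the same pattern. The function names, the `progress` dictionary, and the example URLs are all hypothetical.

```python
import queue
import threading


def import_newsletters(urls, progress):
    """Hypothetical long-running import that records progress as it goes."""
    for done, url in enumerate(urls, start=1):
        if not url:
            raise ValueError("empty feed URL")
        # A real import would fetch and parse the feed here.
        progress["done"] = done
    progress["state"] = "finished"


def worker(jobs):
    """Background loop: process queued jobs until a None sentinel arrives."""
    while True:
        job = jobs.get()
        if job is None:
            jobs.task_done()
            break
        urls, progress = job
        try:
            import_newsletters(urls, progress)
        except Exception as exc:
            # A failed job is recorded, not raised, so the caller keeps running.
            progress["state"] = "failed"
            progress["error"] = str(exc)
        finally:
            jobs.task_done()


def enqueue_import(jobs, urls):
    """Called from the 'web' side: queue the job and return immediately."""
    progress = {"state": "queued", "done": 0, "total": len(urls)}
    jobs.put((urls, progress))
    return progress


if __name__ == "__main__":
    jobs = queue.Queue()
    threading.Thread(target=worker, args=(jobs,), daemon=True).start()
    status = enqueue_import(jobs, ["https://example.com/feed-a", "https://example.com/feed-b"])
    jobs.join()  # a web request would poll `status` instead of waiting
    print(status)
```

The web side only enqueues work and reads the progress record, which is what a front-end progress tracker would poll. In a Celery setup the queue and worker loop are replaced by the broker and worker processes.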

With this more complex task, though, it was like Claude had become a different, less skilled model. It made simple mistakes, like importing the wrong Python libraries and "forgetting" that I had already set up Celery and Redis for other processes. And while the back-end update was made fairly quickly, it also broke the front-end progress tracking and generated reams of malfunctioning JavaScript.

After taking a break and splitting the JavaScript into separate files organized by functionality, I inspected my conversation with Claude. I realized that around the time I started experiencing friction, the Claude model had switched over from Opus to Sonnet because I'd reached my session usage limit.

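Quick tip: If you want to see what Claude model you're using, use the /status command. If you want to switch models, you can use the /model <alias|name> command. If you want Opus to handle planning and Sonnet to handle the coding, use the opusplan alias (/model opusplan). To have Claude plan without touching your files, press Shift+Tab until plan mode is shown.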

Asking Claude for a UX audit and activating plan mode

Once my Opus session limit had reset, I decided it was time to give Claude a new task: a much-needed UI overhaul. But instead of getting started by asking Claude to “clean up” the UI, I wrote a detailed specification and saved it as UI_upgrade.md in my main project folder.

Then I selected the opusplan model alias with the /model command, so that Opus would handle the planning, and asked Claude to review readme.md and the specification doc, explore the code files focused on the UI, and come back with suggestions. Claude did as I requested and provided a recommendations file that also included a development plan.

Once planning was done, I switched to Sonnet, hoping it would perform well with such clear direction. I also asked it to tackle one item at a time and then wait for my approval to proceed. And that worked well. I watched it consolidate messy CSS, zap hard-to-read color combos, and tweak navigation issues. For about 75% of the process, I was able to quickly test and approve changes, all of which were making my UI cleaner, with better wayfinding.

But then there were problems. Claude started deleting JavaScript from HTML pages and forgetting key design elements. In this case, I knew it wasn't the model, since it had been Sonnet all along.

A brush with context rot

While reviewing the chat history, I realized that it was very long. And I remembered how chats in the Console tended to degrade if they carried on for too many rounds. Claude would forget bits of the original instructions or arbitrarily fixate on something small, like a single button. This phenomenon, in which AI chats get worse the longer they go on, is known as context rot, and it can happen even when the model's context window hasn't been fully used.

Once this occurred to me, I asked Claude to summarize progress so far and outstanding issues, and add this info to the spec. Then I used the /clear command to effectively wipe Claude's memory. I restarted the interaction, and things went much more smoothly.

Quick tip: When results degrade, ask Claude for a summary, clear your chat, and start again.

But what about the code?

After a few days of development, I realized something odd, or perhaps insidious. Because I had been relying on Claude Code to actually update my files, I had barely looked at them. I had no idea if they were well-documented or messy or what. I could practically feel my brain atrophying. So I poked around my project files looking for issues.

Mostly, I felt good about what I saw. The code was well documented, not too verbose, and based on my testing, functional. But I also noticed Claude had left behind a lot of test scripts that it had written to check its own changes as part of its workflow. While these can help improve the quality of Claude's changes, and you may want to reuse some of them, it’s a good idea to ask Claude to clean up test files at the end of every session.
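If you'd rather check for leftovers yourself, a small script can list candidates. The sketch below is an assumption about what "stray" means: the `find_stray_test_files` name, the filename patterns, and the skipped directories are all hypothetical and should be adjusted to your project. It only lists files and never deletes anything.

```python
from pathlib import Path


def find_stray_test_files(
    root,
    patterns=("test.py", "test_*.py", "*_test.py"),
    skip_dirs=("tests", "venv", ".venv", "node_modules", ".git"),
):
    """List test-like scripts under root that sit outside the skipped directories."""
    root = Path(root)
    stray = set()
    for pattern in patterns:
        for path in root.rglob(pattern):
            rel = path.relative_to(root)
            # Ignore anything inside a dedicated tests folder or a virtualenv.
            in_skipped_dir = any(part in skip_dirs for part in rel.parts[:-1])
            if path.is_file() and not in_skipped_dir:
                stray.add(rel.as_posix())
    return sorted(stray)


if __name__ == "__main__":
    for name in find_stray_test_files("."):
        print(name)
```

Note that these patterns do not match Django's conventional tests.py files, so real app tests are left out of the list. Review the output before removing anything, since some scripts may be worth keeping.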

I also decided I should continue learning about Python and JavaScript, and trying to code sometimes without AI. The tool is powerful, but it shouldn't become a crutch that prevents me from understanding my own codebase.

Quick tip: Practice hand coding and keep your brain sharp. Understanding your code is still important, even (or especially?) if you routinely code with AI assistance.

Looking ahead: Subagents and beyond

The transition from cut-and-paste to Claude Code wasn't without its glitches, but the productivity gains have been worth it. While I still have to carefully test and debug Claude’s work, I've dramatically sped up development time and mostly improved the quality of my code.

Overall, the ability to have Claude understand my entire project context, make changes directly to files, and iterate quickly has made my life both easier (I can code so much faster!) and harder (I can build way too many features!). I’m also excited about exploring more advanced features, particularly subagents, which can work on different aspects of a problem simultaneously.

For anyone sitting on the fence about making the switch, I suggest starting small, keeping your git commits frequent, and continuing to practice coding by hand.

Key takeaways

Start with exploration: Let Claude understand your project structure before diving into changes

Fix one thing at a time: This makes debugging easier when something goes wrong

Watch for model switches: Different models have different capabilities and session limits

Combat context rot: Clear conversations and start fresh when quality degrades

Stay engaged with your code: Don't let AI assistance atrophy your programming skills

Use planning mode for complex tasks: A good plan leads to better execution

Clean up regularly: Remove test files and keep your codebase tidy

Was wondering if u update the claude.md frequently? It’s game changer to ensure Claude knows the project in details.

Anyway, love the post!

Really enjoyed the depth and detail of this post. Looking at the code and the files is so important, and also an interesting way to supplement learning how to code. By osmosis.